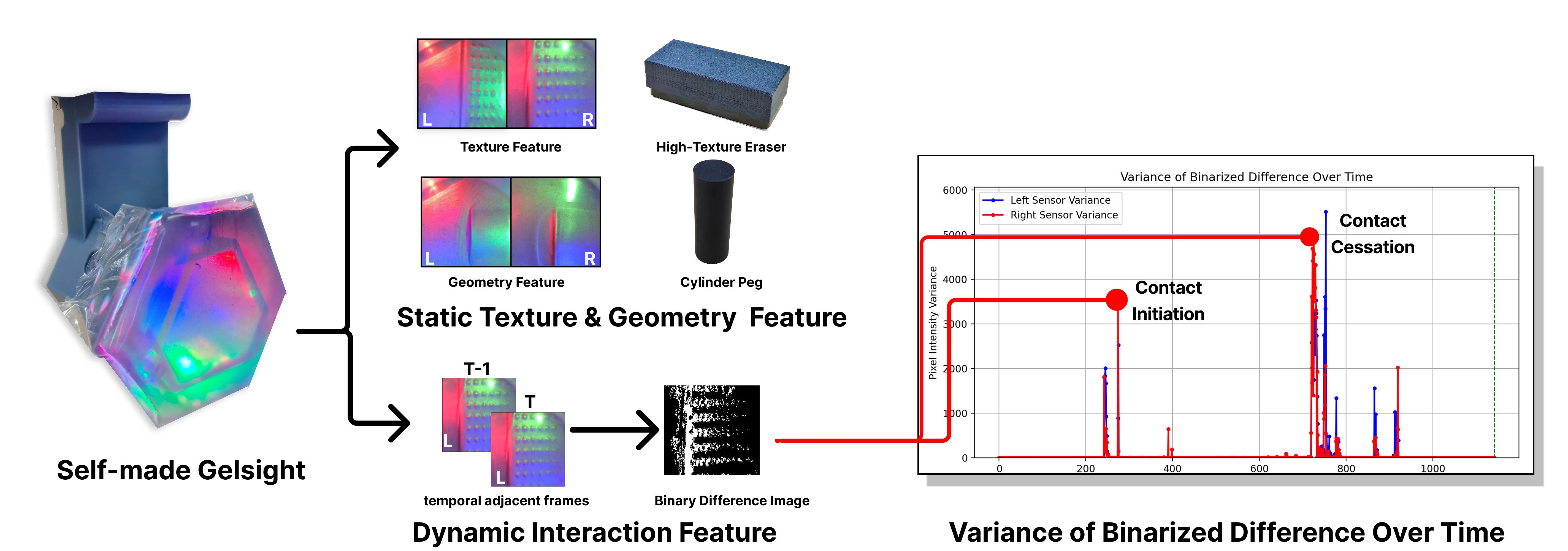

Visuotactile sensing offers rich contact information that can help mitigate performance bottlenecks in imitation learning, particularly under vision-limited conditions such as ambiguous visual cues or occlusions. Effectively fusing visual and visuotactile modalities, however, remains challenging. We introduce GelFusion, a framework that enhances policies by integrating visuotactile feedback, specifically from high-resolution GelSight sensors. GelFusion incorporates visuotactile information into policy learning through a vision-dominated cross-attention fusion mechanism. Its core component is a dual-channel visuotactile feature representation that provides rich contact information by simultaneously leveraging texture-geometric and dynamic interaction features. We evaluated GelFusion on three contact-rich tasks: surface wiping, peg insertion, and fragile object pick-and-place. GelFusion outperforms the baselines, showing that its structure improves the success rate of policy learning.
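To make the idea of a dual-channel representation concrete, here is a minimal NumPy sketch. It assumes, as an illustration only, that the texture-geometric channel is the deformation of the current GelSight frame relative to a no-contact reference frame, and that the dynamic interaction channel is the change between consecutive frames. The function name `dual_channel_features` and these channel definitions are hypothetical; the actual GelFusion encoder may compute its features differently.

```python
import numpy as np


def dual_channel_features(frame, prev_frame, reference):
    """Hypothetical dual-channel tactile representation (illustrative only).

    frame, prev_frame, reference: HxWx3 uint8 GelSight images, where
    `reference` is captured with nothing touching the gel.
    Returns an HxWx6 float32 array:
      channels 0-2: texture-geometric channel (imprint relative to reference)
      channels 3-5: dynamic interaction channel (frame-to-frame change)
    """
    f = frame.astype(np.float32) / 255.0
    p = prev_frame.astype(np.float32) / 255.0
    r = reference.astype(np.float32) / 255.0
    texture = f - r   # static imprint left by the contact surface
    dynamic = f - p   # how the imprint is evolving over time
    return np.concatenate([texture, dynamic], axis=-1)
```

Stacking both channels lets a downstream encoder see what the contact looks like and how it is changing in a single input.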

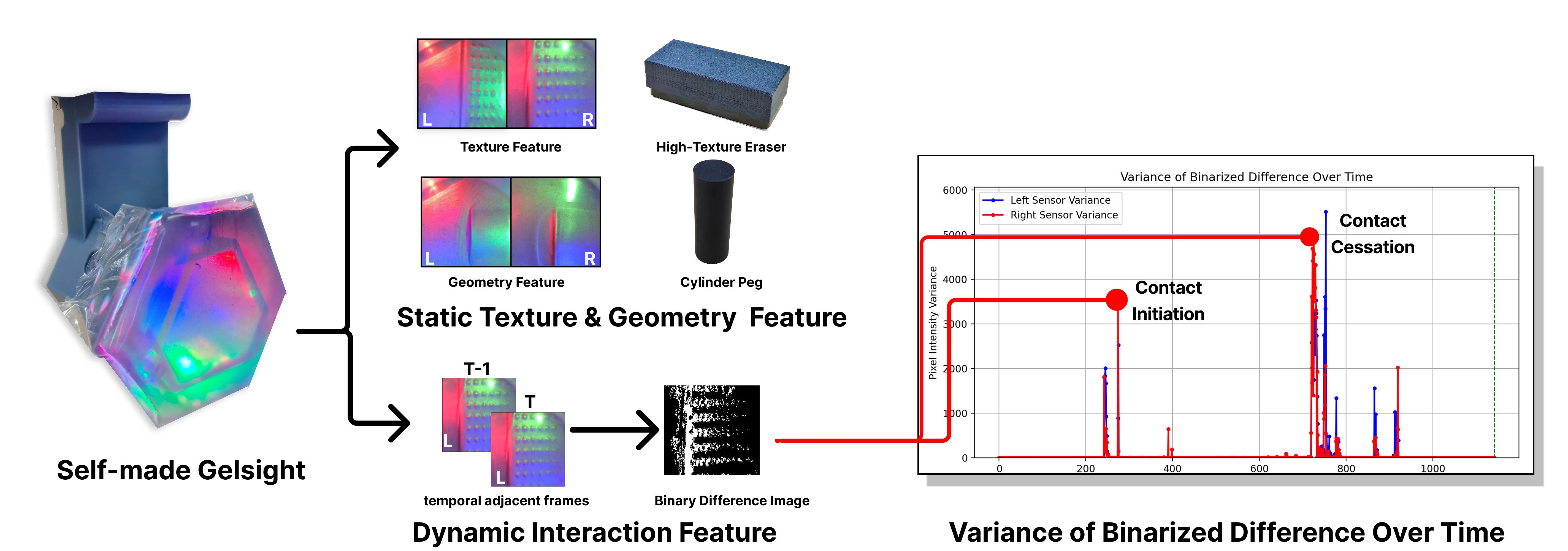

GelSight, a classic high-resolution visuotactile sensor, uses an internal camera to capture the deformation of its gel membrane, encoding tactile information as images. These images provide rich contact information about surface properties and interaction dynamics.

To address the fusion challenge, we introduce GelFusion, a vision-led framework that integrates visuotactile feedback with visual inputs for policy learning, using a cross-attention mechanism for vision-dominant cross-modal fusion.

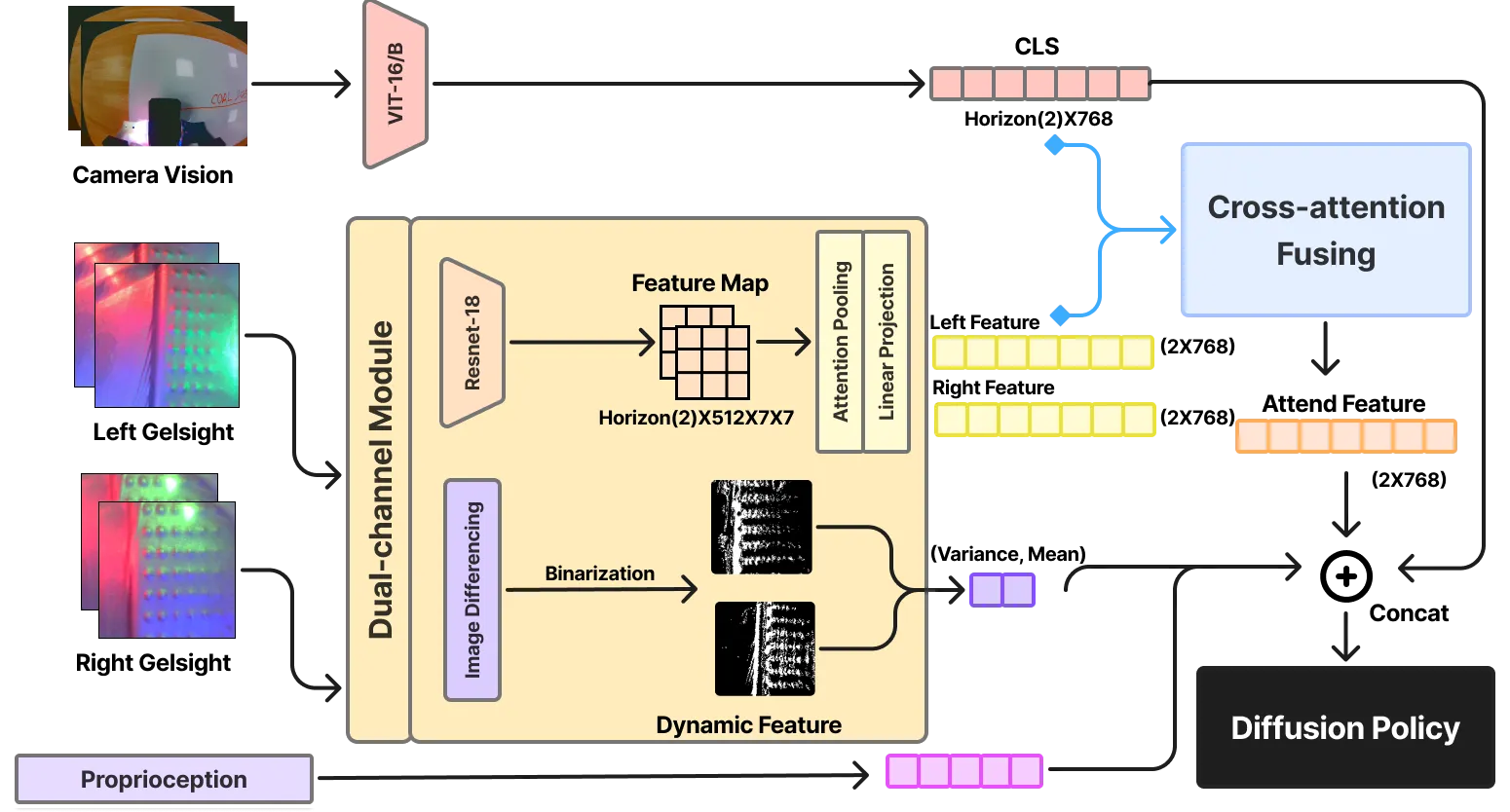

The cross-attention module fuses visual and tactile information by computing attention weights from the visual query, dynamically emphasizing the most relevant features of each modality. The weighted features are then combined into a fused representation that conditions the diffusion policy.
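The following is a minimal single-head NumPy sketch of vision-led cross-attention. It assumes one visual feature vector as the only query, keys and values built from the visual token plus the tactile tokens, and a residual connection that keeps vision as the dominant stream. The function name, the token layout, and the residual are illustrative assumptions, not the authors' implementation; in a real model the projection matrices are learned and the attention is usually multi-head.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def vision_led_cross_attention(visual, tactile, w_q, w_k, w_v):
    """Fuse modality tokens using a query derived only from vision.

    visual:  (d,) visual feature vector
    tactile: (m, d) tactile feature tokens (e.g. texture and dynamic channels)
    w_q, w_k, w_v: (d, d) projection matrices (learned in practice)
    Returns (fused, weights): a (d,) fused vector and (m + 1,) attention weights.
    """
    tokens = np.vstack([visual[None, :], tactile])  # (m + 1, d) candidate features
    q = visual @ w_q                                 # query comes from vision only
    k = tokens @ w_k                                 # (m + 1, d)
    v = tokens @ w_v                                 # (m + 1, d)
    scores = k @ q / np.sqrt(q.shape[-1])            # relevance of each token to vision
    weights = softmax(scores)                        # sums to 1 over tokens
    fused = visual + weights @ v                     # residual keeps vision dominant
    return fused, weights
```

Because the query comes only from vision, the visual context decides how much each tactile token contributes; when touch is uninformative its weight can shrink toward zero.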

The surface wiping experiment evaluates how GelFusion helps robots overcome limitations of vision, such as depth ambiguity, when establishing precise contact and maintaining consistent pressure and orientation for effective cleaning. The task comprises two critical contact phases: first establishing contact from an arbitrary height, then maintaining consistent pressure and orientation during the wiping motion.
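The two contact phases can be read off a tactile signal even when depth is visually ambiguous. The sketch below assumes a hypothetical per-frame deformation magnitude (for example, the mean of |frame - reference| over the gel image) and a hypothetical contact threshold. It labels frames as "approach" or "wiping" and measures how steady the pressure proxy stays once contact is made. It illustrates the information touch provides; it is not part of GelFusion's learned policy.

```python
import numpy as np


def label_wiping_phases(deformation, contact_threshold=0.05):
    """Label each timestep as 'approach' or 'wiping' (illustrative only).

    deformation: (T,) per-frame tactile deformation magnitude (hypothetical signal).
    contact_threshold: hypothetical value above which the gel is in contact.
    """
    in_contact = np.asarray(deformation) > contact_threshold
    if not in_contact.any():
        return ["approach"] * len(in_contact)
    first = int(np.argmax(in_contact))  # index of the first frame with contact
    return ["approach"] * first + ["wiping"] * (len(in_contact) - first)


def wiping_pressure_std(deformation, labels):
    """Std of the deformation proxy during wiping; lower means steadier pressure."""
    vals = [d for d, lab in zip(deformation, labels) if lab == "wiping"]
    return float(np.std(vals)) if vals else 0.0
```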

To keep the whiteboard writing legible in the observed images, we adjusted camera parameters such as exposure to reduce light reflections.

The peg insertion task evaluates precise alignment and challenges the robot with vision-limited conditions, particularly during the final insertion phase, where visual occlusion is unavoidable. The task comprises grasping, transport, alignment, insertion, and release. It was evaluated under significant randomization of peg and puzzle box positions and orientations, with distractor holes present, to assess the policy's robustness and its reliance on tactile feedback for success.

This task requires the robot to grasp highly fragile objects such as potato chips. Because brittle materials can fracture suddenly under excessive force, force control is crucial for preserving their structural integrity. The task highlights the limitation of relying solely on wrist-camera vision, which lacks the force-sensitive feedback needed to prevent such instantaneous damage.

The vision-only policy (Diffusion Policy) often fails, not by crushing the chips, but by applying too little force: visually similar inputs lead it to make premature grasp-timing decisions. GelFusion's tactile feedback enables accurate force control, allowing successful and delicate handling.
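Tactile images help here because they reveal whether a grasp is actually established before the robot lifts. The hand-written gate below is only an illustration of that signal; GelFusion itself learns this behavior from demonstrations. It assumes a hypothetical contact-area measure (the fraction of gel pixels that differ from the no-contact reference by more than a noise floor) and hypothetical area bounds.

```python
import numpy as np


def contact_area(frame, reference, noise_floor=0.1):
    """Fraction of gel pixels deformed beyond a (hypothetical) noise floor."""
    diff = np.abs(frame.astype(np.float32) - reference.astype(np.float32)) / 255.0
    return float((diff.max(axis=-1) > noise_floor).mean())


def ready_to_lift(area, min_area=0.02, max_area=0.15):
    """Lift only once the grasp is established (area >= min_area) and
    before the squeeze becomes excessive (area <= max_area).
    Both bounds are hypothetical and would depend on the object and gripper."""
    return min_area <= area <= max_area
```

The lower bound guards against the premature, too-light grasp described above, while the upper bound guards against crushing.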

@misc{jiang2025gelfusionenhancingroboticmanipulation,
  title={GelFusion: Enhancing Robotic Manipulation under Visual Constraints via Visuotactile Fusion},
  author={Shulong Jiang and Shiqi Zhao and Yuxuan Fan and Peng Yin},
  year={2025},
  eprint={2505.07455},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2505.07455},
}